Articles

[1] Queenie Luo, Michael J. Puett, Michael D. Smith. “A Perspectival Mirror of the Elephant: Investigating Language Bias on Google, ChatGPT, YouTube, and Wikipedia”. ACM Queue 22, 1 (January/February 2024).

Press: Wikimedia Research Newsletter, Twitter, South China Morning Post

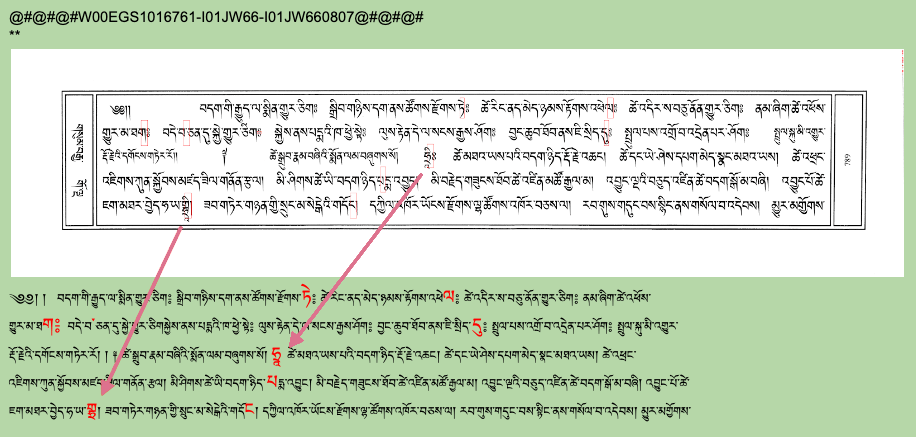

[2] Queenie Luo†, Yung-Sung Chuang†. “Cleansing Jewel: A Neural Spelling Correction Model Built On Google OCR-ed Tibetan Manuscripts”. ACM Transactions on Asian and Low-Resource Language Information Processing. Just Accepted (April 2024). († indicates equal contribution.)

[3] Queenie Luo, Leonard W. J. van der Kuijp. “Norbu Ketaka: Auto-Correcting BDRC’s E-Text Corpora Using Natural Language Processing and Computer Vision Methods”. (Forthcoming in Tibetan digital humanities and natural language processing. PIATS 2022: Proceedings of the Sixteenth Seminar of the International Association for Tibetan Studies.)

Press/Related Link: Norbu Ketaka database, BUDA

Projects

Norbu Ketaka Database

Project lead: Queenie Luo, Principal Investigator: Leonard W. J. van der Kuijp.

Description: The Norbu Ketaka project applies state-of-the-art AI and deep-learning techniques to process one million pages of Tibetan texts. The work includes labeling training data, adjusting neural network architectures, and building new Natural Language Processing (NLP) and Computer Vision models. A personnel management system, built on the Google Docs API and Google Drive API, distributed 12,000 documents to 40 part-time annotators according to their Tibetan proficiency and time availability, and tracked and evaluated the quality of the edited documents. The final “cleaned” texts have been donated to BDRC and are publicly accessible here.
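The description does not say how documents were matched to annotators. Purely as an illustration, the sketch below assumes each annotator has a proficiency level (a hypothetical 1 to 3 scale) and a capacity (documents they can take in one cycle), and each document has a difficulty on the same scale. It greedily hands the hardest documents out first, each to the qualified annotator with the most remaining capacity. `Annotator` and `assign_documents` are hypothetical names, not part of the Google Docs or Google Drive APIs.

```python
from dataclasses import dataclass, field


@dataclass
class Annotator:
    name: str
    proficiency: int   # hypothetical scale: 1 = beginner, 3 = expert
    capacity: int      # hypothetical: documents this annotator can take this cycle
    assigned: list = field(default_factory=list)


def assign_documents(docs, annotators):
    """Greedy sketch: docs is a list of (doc_id, difficulty) pairs.

    Hardest documents are placed first, each with the qualified annotator
    who has the most remaining capacity. Returns the ids that could not
    be placed with anyone.
    """
    unassigned = []
    # Sort by difficulty, descending, so scarce expert time goes to hard pages.
    for doc_id, difficulty in sorted(docs, key=lambda d: d[1], reverse=True):
        candidates = [
            a for a in annotators
            if a.proficiency >= difficulty and len(a.assigned) < a.capacity
        ]
        if not candidates:
            unassigned.append(doc_id)
            continue
        best = max(candidates, key=lambda a: a.capacity - len(a.assigned))
        best.assigned.append(doc_id)
    return unassigned


team = [Annotator("A", proficiency=3, capacity=2), Annotator("B", proficiency=1, capacity=3)]
left = assign_documents([("d1", 1), ("d2", 3), ("d3", 2), ("d4", 1)], team)
print([a.assigned for a in team], left)
```

A real system would also weigh time availability, deadlines, and quality scores from earlier rounds; the greedy rule is only one simple way to respect proficiency and capacity constraints.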

Press/Related Link: Norbu Ketaka database, BUDA

Kraft

Authors: Queenie Luo, Yafei Chen, Hongsu Wang, Kanghun Ahn, Sun Joo Kim, Peter Bol, CBDB Group

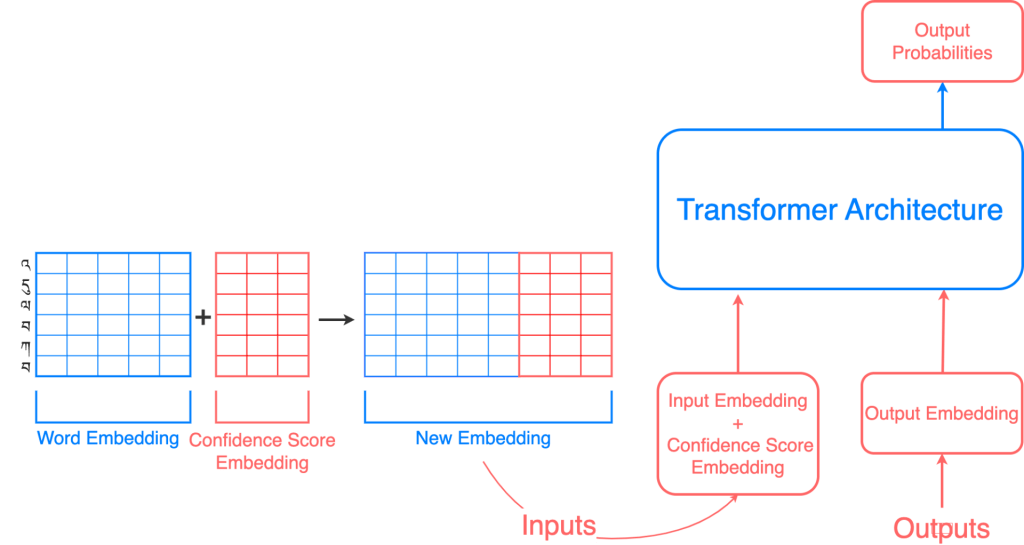

Description: The Kraft (Korean Romanization From Transformer) model romanizes Korean personal names written in Hangul, converting them into the Roman alphabet according to the McCune–Reischauer system. Kraft is built on the Transformer, a neural network architecture introduced by Google researchers in the 2017 paper “Attention Is All You Need”. The Transformer was designed for sequence-to-sequence tasks such as machine translation and has since been applied to language modeling and summarization.
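The central operation of the Transformer is scaled dot-product attention, softmax(QKᵀ/√d_k)V, as defined in “Attention Is All You Need”. The pure-Python sketch below computes it for small matrices stored as lists of lists, to show what one attention step does. It is a teaching sketch, not Kraft’s code, and it omits multi-head projections, masking, and batching.

```python
import math


def softmax(xs):
    m = max(xs)                          # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Q: n x d_k, K: m x d_k, V: m x d_v (lists of lists). Returns n x d_v."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # The output row is the attention-weighted average of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V)) for j in range(len(V[0]))])
    return out


Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(scaled_dot_product_attention(Q, K, V))  # more weight on the first value row
```

Because the query matches the first key more closely, the first value row receives the larger weight; the weights always sum to 1, so each output row is a convex combination of the value rows.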

Code: Hugging Face Kraft Model

Lepton

Authors: Queenie Luo, Katherine Enright, Hongsu Wang, Peter Bol, CBDB Group

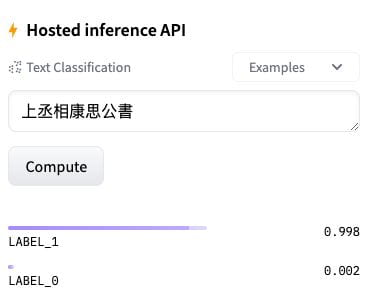

Description: The LEPTON (Classical Chinese Letter Prediction) model is a BertForSequenceClassification model for Classical Chinese that predicts whether a Classical Chinese sentence is a letter title (书信标题). The model was initialized from the BERT-base Chinese model (a masked language model, MLM), fine-tuned on a large Classical Chinese corpus (a 3 GB text dataset), and then combined with the BertForSequenceClassification architecture to perform binary classification (labels: 0 = non-letter, 1 = letter). The model helped CBDB identify 50,000 letters efficiently and accurately and build a letter platform for research on Ming letter associations.
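A BertForSequenceClassification model returns one raw score (logit) per label, and the predicted label is the index of the largest logit. The sketch below shows only that final step in pure Python: it maps two logits to the labels described above and reports a softmax probability. The function name `predict_label` and the example logits are illustrative assumptions; in practice the logits would come from running the fine-tuned model on a tokenized sentence, which is not shown here.

```python
import math

# Label mapping stated in the model description: 0 = non-letter, 1 = letter.
ID2LABEL = {0: "non-letter", 1: "letter"}


def predict_label(logits):
    """Turn [score_non_letter, score_letter] into (label, probability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]   # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    # argmax; on an exact tie, max() keeps the first index (non-letter)
    best = max(range(len(probs)), key=probs.__getitem__)
    return ID2LABEL[best], probs[best]


print(predict_label([-1.2, 2.3]))  # a sentence the model scores as a letter title
```

Reporting the probability alongside the label makes it possible to send low-confidence predictions to a human reviewer instead of accepting them automatically.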